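RH236 GlusterFS Storage Configuration

Host Planning

The first four nodes host the GlusterFS storage configurations covered here: distributed, replicated, distributed-replicated, and geo-replication volumes. The fifth host acts as the client and as the remote site used to simulate geo-replication disaster recovery. Every configuration below uses IP addresses; in production, prefer hostnames or domain names, so that when an IP changes you only update the DNS record and nothing has to be modified in GlusterFS.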

| Node | Hostname | IP address |
|---|---|---|
| node1 | server2-a.example.com | 172.25.2.10 |
| node2 | server2-b.example.com | 172.25.2.11 |
| node3 | server2-c.example.com | 172.25.2.12 |
| node4 | server2-d.example.com | 172.25.2.13 |
| node5 | server2-e.example.com | 172.25.2.14 |
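To make the hostname recommendation concrete, here is a minimal sketch that prints `/etc/hosts`-style entries for the five lab nodes. It assumes a lab without DNS; the short aliases (`server2-a` and so on) and the function name `print_hosts_entries` are illustrative and do not come from the original setup.

```shell
# Print one hosts-file line per lab node (172.25.2.10 .. 172.25.2.14).
# Assumption: names are resolved through /etc/hosts; production would use DNS.
print_hosts_entries() {
    octet=10
    for letter in a b c d e; do
        printf '172.25.2.%d server2-%s.example.com server2-%s\n' \
            "$octet" "$letter" "$letter"
        octet=$((octet + 1))
    done
}

# Review the output, then append it to /etc/hosts on every node if it fits.
print_hosts_entries
```

With name resolution in place, the hostnames could be used wherever the commands in this article use an IP address.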

    # On node1: add the other nodes to the trusted storage pool
    [root@server2-a ~]# gluster
    gluster> peer probe 172.25.2.10
    peer probe: success. Probe on localhost not needed
    gluster> peer probe 172.25.2.11
    peer probe: success.
    gluster> peer probe 172.25.2.12
    peer probe: success.
    gluster> peer probe 172.25.2.13
    peer probe: success.
    gluster>
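The same probes can be issued non-interactively. The sketch below is a dry run by default: the helper `run` and the `DRY_RUN` variable are hypothetical names introduced here, and the script only prints the `gluster peer probe` and `gluster peer status` commands unless `DRY_RUN=0` is set on a node that actually runs glusterd.

```shell
# Print the command instead of executing it unless DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Probe every peer passed as an argument, then show the pool state.
probe_peers() {
    for peer in "$@"; do
        run gluster peer probe "$peer"
    done
    run gluster peer status
}

probe_peers 172.25.2.11 172.25.2.12 172.25.2.13
```

Probing the local node is unnecessary, which is why 172.25.2.10 is left out of the list.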

    # node1: create a thin pool and two thin LVs, format them as XFS, mount them as bricks
    [root@server2-a ~]# mkdir -p /bricks/test
    [root@server2-a ~]# mkdir -p /bricks/data
    [root@server2-a ~]# vgs
      VG        #PV #LV #SN Attr   VSize  VFree
      vg_bricks   1   0   0 wz--n- 14.59g 14.59g
    [root@server2-a ~]# lvcreate -L 13G -T vg_bricks/brickspool
      Logical volume "lvol0" created
      Logical volume "brickspool" created
    [root@server2-a ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_a1
      Logical volume "brick_a1" created
    [root@server2-a ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_a2
      Logical volume "brick_a2" created
    [root@server2-a ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_a1
    [root@server2-a ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_a2
    # /etc/fstab after adding the two brick entries (last two lines)
    [root@server2-a ~]# cat /etc/fstab |grep -v \#
    UUID=0cad9910-91e8-4889-8764-fab83b8497b9 / ext4 defaults 1 1
    UUID=661c5335-6d03-4a7b-a473-a842b833f995 /boot ext4 defaults 1 2
    UUID=b37f001b-5ef3-4589-b813-c7c26b4ac2af swap swap defaults 0 0
    tmpfs /dev/shm tmpfs defaults 0 0
    devpts /dev/pts devpts gid=5,mode=620 0 0
    sysfs /sys sysfs defaults 0 0
    proc /proc proc defaults 0 0
    /dev/vg_bricks/brick_a1 /bricks/test/ xfs defaults 0 0
    /dev/vg_bricks/brick_a2 /bricks/data/ xfs defaults 0 0
    [root@server2-a ~]# mount -a
    [root@server2-a ~]# df -h
    # The brick directories live one level below the mount points
    [root@server2-a ~]# mkdir -p /bricks/test/testvol_n1
    [root@server2-a ~]# mkdir -p /bricks/data/datavol_n1

    # node2: same procedure, bricks brick_b1 and brick_b2
    [root@server2-b ~]# mkdir -p /bricks/test
    [root@server2-b ~]# mkdir -p /bricks/data
    [root@server2-b ~]# lvcreate -L 13G -T vg_bricks/brickspool
    [root@server2-b ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_b1
    [root@server2-b ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_b2
    [root@server2-b ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_b1
    [root@server2-b ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_b2
    # Entries added to /etc/fstab on node2:
    /dev/vg_bricks/brick_b1 /bricks/test/ xfs defaults 0 0
    /dev/vg_bricks/brick_b2 /bricks/data/ xfs defaults 0 0
    [root@server2-b ~]# mount -a
    [root@server2-b ~]# df -h
    [root@server2-b ~]# mkdir -p /bricks/test/testvol_n2
    [root@server2-b ~]# mkdir -p /bricks/data/datavol_n2

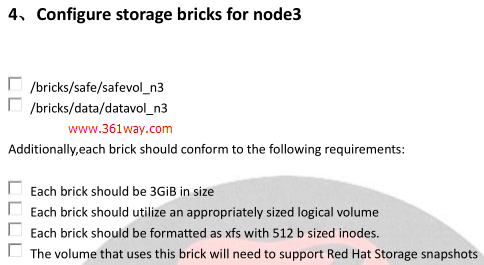

    # node3: bricks brick_c1 (for safevol) and brick_c2 (for datavol)
    [root@server2-c ~]# mkdir -p /bricks/safe
    [root@server2-c ~]# mkdir -p /bricks/data
    [root@server2-c ~]# lvcreate -L 13G -T vg_bricks/brickspool
    [root@server2-c ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_c1
    [root@server2-c ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_c2
    [root@server2-c ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_c1
    [root@server2-c ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_c2
    # Entries added to /etc/fstab on node3:
    /dev/vg_bricks/brick_c1 /bricks/safe/ xfs defaults 0 0
    /dev/vg_bricks/brick_c2 /bricks/data/ xfs defaults 0 0
    [root@server2-c ~]# mount -a
    [root@server2-c ~]# df -h
    [root@server2-c ~]# mkdir -p /bricks/safe/safevol_n3
    [root@server2-c ~]# mkdir -p /bricks/data/datavol_n3

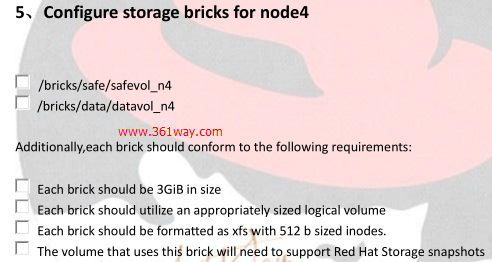

    # node4: bricks brick_d1 (for safevol) and brick_d2 (for datavol)
    [root@server2-d ~]# mkdir -p /bricks/safe
    [root@server2-d ~]# mkdir -p /bricks/data
    [root@server2-d ~]# lvcreate -L 13G -T vg_bricks/brickspool
    [root@server2-d ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_d1
    [root@server2-d ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_d2
    [root@server2-d ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_d1
    [root@server2-d ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_d2
    # Entries added to /etc/fstab on node4:
    /dev/vg_bricks/brick_d1 /bricks/safe/ xfs defaults 0 0
    /dev/vg_bricks/brick_d2 /bricks/data/ xfs defaults 0 0
    [root@server2-d ~]# mount -a
    [root@server2-d ~]# df -h
    [root@server2-d ~]# mkdir -p /bricks/safe/safevol_n4
    [root@server2-d ~]# mkdir -p /bricks/data/datavol_n4
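Since the brick preparation is nearly identical on all four nodes, it can be captured in one parameterised helper. The following is a dry-run sketch only: `run`, `DRY_RUN`, and `prepare_bricks` are hypothetical names, the sizes and the `vg_bricks/brickspool` pool are taken from the transcripts above, and the `fstab:` lines are printed for review rather than written to `/etc/fstab`.

```shell
# Print the command instead of executing it unless DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# prepare_bricks LETTER MOUNT...
# LETTER is the node suffix (a, b, c, d); each MOUNT becomes /bricks/MOUNT.
prepare_bricks() {
    letter=$1
    shift
    run lvcreate -L 13G -T vg_bricks/brickspool
    idx=1
    for mnt in "$@"; do
        lv="brick_${letter}${idx}"
        run lvcreate -V 3G -T vg_bricks/brickspool -n "$lv"
        run mkfs.xfs -i size=512 "/dev/vg_bricks/$lv"
        run mkdir -p "/bricks/$mnt"
        # Line to add to /etc/fstab by hand before running "mount -a"
        echo "fstab: /dev/vg_bricks/$lv /bricks/$mnt xfs defaults 0 0"
        idx=$((idx + 1))
    done
    run mount -a
}

# Example: the layout used on node3 above.
prepare_bricks c safe data
```

Keeping the fstab edit manual is a deliberate choice in this sketch, because a wrong entry can prevent a node from booting cleanly.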

    # Distributed volume testvol across node1 and node2
    [root@server2-a ~]# gluster
    gluster> volume create testvol 172.25.2.10:/bricks/test/testvol_n1 172.25.2.11:/bricks/test/testvol_n2
    volume create: testvol: success: please start the volume to access data
    gluster> volume start testvol
    volume start: testvol: success
    # Only allow clients from the 172.25.2.0/24 lab network
    gluster> volume set testvol auth.allow 172.25.2.*
    gluster> volume list
    gluster> volume info testvol

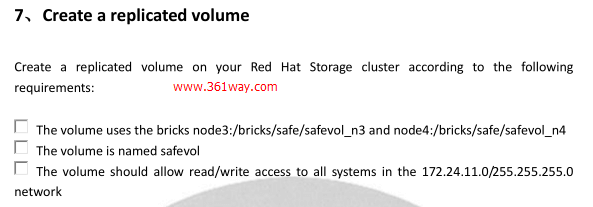

    # Replicated volume safevol (replica 2) across node3 and node4
    [root@server2-a ~]# gluster
    gluster> volume create safevol replica 2 172.25.2.12:/bricks/safe/safevol_n3 172.25.2.13:/bricks/safe/safevol_n4
    gluster> volume start safevol
    gluster> volume set safevol auth.allow 172.25.2.*
    gluster> volume list
    gluster> volume info safevol

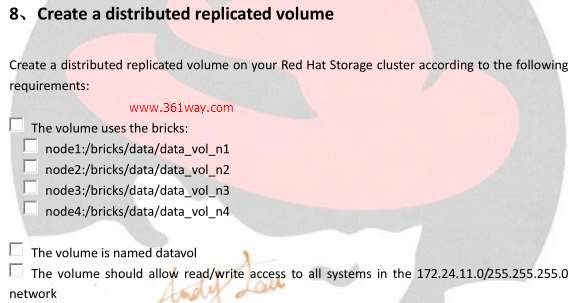

    # Distributed-replicated volume datavol (2 x 2) across all four nodes,
    # using the datavol_n1 .. datavol_n4 directories created on each node
    [root@server2-a ~]# gluster
    gluster> volume create datavol replica 2 172.25.2.10:/bricks/data/datavol_n1 172.25.2.11:/bricks/data/datavol_n2 172.25.2.12:/bricks/data/datavol_n3 172.25.2.13:/bricks/data/datavol_n4
    gluster> volume start datavol
    gluster> volume set datavol auth.allow 172.25.2.*
    gluster> volume list
    gluster> volume info datavol

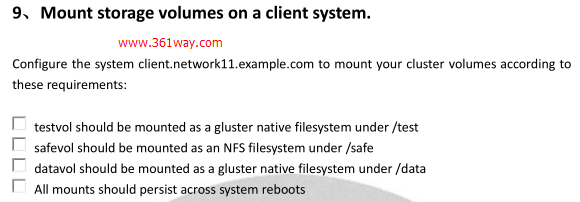
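When a volume is created with `replica 2` and four bricks, GlusterFS groups consecutive bricks from the command line into replica sets, so brick order decides which nodes mirror each other. The sketch below only illustrates that grouping in plain shell; `show_replica_sets` is a hypothetical helper, and the brick paths assume the `datavol_n1` to `datavol_n4` directories created on each node during brick preparation.

```shell
# show_replica_sets REPLICA_COUNT BRICK...
# Prints which bricks end up mirroring each other, in command-line order.
show_replica_sets() {
    replica=$1
    shift
    set_no=1
    count=0
    line=""
    for brick in "$@"; do
        line="${line:+$line }$brick"
        count=$((count + 1))
        if [ "$count" -eq "$replica" ]; then
            echo "replica set $set_no: $line"
            set_no=$((set_no + 1))
            count=0
            line=""
        fi
    done
}

show_replica_sets 2 \
    172.25.2.10:/bricks/data/datavol_n1 \
    172.25.2.11:/bricks/data/datavol_n2 \
    172.25.2.12:/bricks/data/datavol_n3 \
    172.25.2.13:/bricks/data/datavol_n4
```

With this ordering, node1 and node2 hold one mirrored pair and node3 and node4 hold the other, and files are distributed across the two pairs.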

    # Client (node5): testvol and datavol use the native client, safevol uses NFS
    [root@server2-e ~]# cat /etc/fstab |grep -v \#
    UUID=0cad9910-91e8-4889-8764-fab83b8497b9 / ext4 defaults 1 1
    UUID=661c5335-6d03-4a7b-a473-a842b833f995 /boot ext4 defaults 1 2
    UUID=b37f001b-5ef3-4589-b813-c7c26b4ac2af swap swap defaults 0 0
    tmpfs /dev/shm tmpfs defaults 0 0
    devpts /dev/pts devpts gid=5,mode=620 0 0
    sysfs /sys sysfs defaults 0 0
    proc /proc proc defaults 0 0
    172.25.2.10:/testvol /test glusterfs _netdev,acl 0 0
    172.25.2.10:/safevol /safe nfs _netdev 0 0
    172.25.2.10:/datavol /data glusterfs _netdev 0 0
    [root@server2-e ~]# mkdir /test /safe /data
    [root@server2-e ~]# mount -a
    [root@server2-e ~]# df -h
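Before committing entries to `/etc/fstab`, it can help to try the equivalent one-off mounts. The following dry-run sketch prints those `mount` commands; `run`, `DRY_RUN`, and `mount_volume` are hypothetical names. The `vers=3` option is an assumption added here because Gluster's built-in NFS server speaks NFSv3; the fstab entry above does not set it.

```shell
# Print the command instead of executing it unless DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# mount_volume TYPE OPTIONS SOURCE TARGET
mount_volume() {
    run mkdir -p "$4"
    run mount -t "$1" -o "$2" "$3" "$4"
}

mount_volume glusterfs acl      172.25.2.10:/testvol /test
mount_volume nfs       vers=3   172.25.2.10:/safevol /safe   # vers=3 is an assumption
mount_volume glusterfs defaults 172.25.2.10:/datavol /data
```

Once the one-off mounts behave as expected, the fstab entries make them persistent across reboots.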

    # POSIX ACLs on the testvol mount: suresh gets rwx, anita gets r-x, others get nothing
    [root@server2-e ~]# mkdir -p /test/confidential
    [root@server2-e ~]# groupadd admins
    [root@server2-e ~]# chgrp admins /test/confidential/
    [root@server2-e ~]# useradd suresh
    [root@server2-e ~]# cd /test/
    [root@server2-e test]# setfacl -m u:suresh:rwx /test/confidential/
    [root@server2-e test]# setfacl -m d:u:suresh:rwx /test/confidential/
    [root@server2-e test]# useradd anita
    [root@server2-e test]# setfacl -m u:anita:rx /test/confidential/
    [root@server2-e test]# setfacl -m d:u:anita:rx /test/confidential/
    [root@server2-e test]# chmod o-rx /test/confidential/
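Each user above receives two ACL entries: an access ACL on the directory itself and a default ACL (`d:`) that new files and subdirectories inherit. The dry-run sketch below pairs the two and finishes with `getfacl` to review the result; `run`, `DRY_RUN`, and `grant_acl` are hypothetical names introduced for illustration.

```shell
# Print the command instead of executing it unless DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# grant_acl USER PERMS DIR
# Sets the access ACL and the matching default ACL in one step.
grant_acl() {
    run setfacl -m "u:$1:$2" "$3"
    run setfacl -m "d:u:$1:$2" "$3"
}

grant_acl suresh rwx /test/confidential
grant_acl anita rx /test/confidential
run getfacl /test/confidential
```

ACLs on a native GlusterFS mount only take effect when the volume is mounted with the `acl` option, as in the `/test` fstab entry.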

    # Directory quota: limit /mailspool on safevol to 192MB
    [root@server2-e ~]# mkdir -p /safe/mailspool
    [root@server2-a ~]# gluster
    gluster> volume quota safevol enable
    gluster> volume quota safevol limit-usage /mailspool 192MB
    gluster> volume quota safevol list
    [root@server2-e ~]# chmod o+w /safe/mailspool

    # On node5 (slave): create the slave volume testrep on a thin-provisioned brick
    [root@server2-e ~]# lvcreate -L 13G -T vg_bricks/brickspool
    [root@server2-e ~]# lvcreate -V 8G -T vg_bricks/brickspool -n slavebrick1
    [root@server2-e ~]# mkfs.xfs -i size=512 /dev/vg_bricks/slavebrick1
    [root@server2-e ~]# mkdir -p /bricks/slavebrick1
    [root@server2-e ~]# vim /etc/fstab
    /dev/vg_bricks/slavebrick1 /bricks/slavebrick1 xfs defaults 0 0
    [root@server2-e ~]# mount -a
    [root@server2-e ~]# mkdir -p /bricks/slavebrick1/brick
    [root@server2-e ~]# gluster volume create testrep 172.25.2.14:/bricks/slavebrick1/brick/
    [root@server2-e ~]# gluster volume start testrep
    # Unprivileged geo-replication user and mountbroker directory
    [root@server2-e ~]# groupadd repgrp
    [root@server2-e ~]# useradd georep -G repgrp
    [root@server2-e ~]# passwd georep
    [root@server2-e ~]# mkdir -p /var/mountbroker-root
    [root@server2-e ~]# chmod 0711 /var/mountbroker-root/
    # glusterd.vol after adding the rpc-auth, mountbroker and log-group options
    [root@server2-e ~]# cat /etc/glusterfs/glusterd.vol
    volume management
        type mgmt/glusterd
        option working-directory /var/lib/glusterd
        option transport-type socket,rdma
        option transport.socket.keepalive-time 10
        option transport.socket.keepalive-interval 2
        option transport.socket.read-fail-log off
        option ping-timeout 0
    #   option base-port 49152
        option rpc-auth-allow-insecure on
        option mountbroker-root /var/mountbroker-root/
        option mountbroker-geo-replication.georep testrep
        option geo-replication-log-group repgrp
    end-volume
    [root@server2-e ~]# /etc/init.d/glusterd restart
    # On node1 (master): passwordless SSH to the slave, then create and start the session
    [root@server2-a ~]# ssh-keygen
    [root@server2-a ~]# ssh-copy-id georep@172.25.2.14
    [root@server2-a ~]# ssh georep@172.25.2.14
    [root@server2-a ~]# gluster system:: execute gsec_create
    [root@server2-a ~]# gluster volume geo-replication testvol georep@172.25.2.14::testrep create push-pem
    # Back on node5: install the pushed keys for the georep user
    [root@server2-e ~]# sh /usr/libexec/glusterfs/set_geo_rep_pem_keys.sh georep testvol testrep
    [root@server2-a ~]# gluster volume geo-replication testvol georep@172.25.2.14::testrep start
    [root@server2-a ~]# gluster volume geo-replication testvol georep@172.25.2.14::testrep status

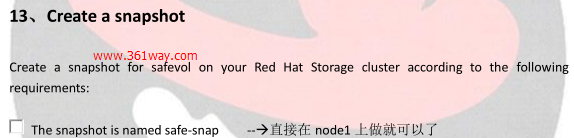
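The geo-replication session commands all share the same master volume and slave URL, so a small wrapper keeps them consistent. This is a dry-run sketch: `run`, `DRY_RUN`, and `georep` are hypothetical names, and the slave user and host (`georep` on 172.25.2.14, node5) are assumptions inferred from the host table and the mountbroker setup rather than values confirmed by the transcript.

```shell
# Print the command instead of executing it unless DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

MASTER_VOL=testvol
SLAVE_USER=georep        # assumption: the mountbroker user created on node5
SLAVE_HOST=172.25.2.14   # assumption: node5 from the host table
SLAVE_VOL=testrep

# georep ACTION...  e.g. "create push-pem", "start", "status", "stop"
georep() {
    run gluster volume geo-replication "$MASTER_VOL" \
        "${SLAVE_USER}@${SLAVE_HOST}::${SLAVE_VOL}" "$@"
}

georep create push-pem
georep start
georep status
```

Run it on the master node with `DRY_RUN=0` only after the SSH keys and the mountbroker configuration on the slave are in place.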

    # Snapshot of safevol (requires thin-provisioned LVM bricks, as created above)
    [root@server2-a ~]# gluster snapshot create safe-snap safevol
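After creating a snapshot, it is usually worth listing and inspecting it, and knowing how to roll back. The dry-run sketch below prints the relevant `gluster snapshot` commands; `run`, `DRY_RUN`, `snapshot_cycle`, and `restore_snapshot` are hypothetical names. Some GlusterFS versions may append a timestamp to the snapshot name, so the name used for `info` and `restore` should be taken from the `list` output.

```shell
# Print the command instead of executing it unless DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# snapshot_cycle VOLUME SNAPNAME: create, then list and inspect.
snapshot_cycle() {
    run gluster snapshot create "$2" "$1"
    run gluster snapshot list "$1"
    run gluster snapshot info "$2"
}

# restore_snapshot VOLUME SNAPNAME: the volume must be stopped for a restore.
# Note: "gluster volume stop" asks for confirmation when run interactively.
restore_snapshot() {
    run gluster volume stop "$1"
    run gluster snapshot restore "$2"
    run gluster volume start "$1"
}

snapshot_cycle safevol safe-snap
restore_snapshot safevol safe-snap
```

A restore replaces the current volume contents with the snapshot state, so it is best rehearsed on a test volume first.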


- Author: shisekong

- Link: https://blog.361way.com/glusterfs-config/5040.html

- License: This work is under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. Kindly fulfill the requirements of the aforementioned License when adapting or creating a derivative of this work.