LVS High Availability (6): LVS + keepalived Master/Backup

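Earlier posts in this series introduced LVS and keepalived separately; this post starts on the combined LVS + keepalived solution. Using LVS DR mode as the example, HA is added at the load-balancer (LB) layer itself. The architecture is shown in the figure below.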

I. IP Address Plan

| Host | IP address |
|---|---|
| realserver | 192.168.122.10 |
| realserver | 192.168.122.20 |
| director | 192.168.122.30 |
| director | 192.168.122.40 |
| VIP | 192.168.122.100 |

Install Apache httpd on both realservers (`yum -y install httpd`).

Install ipvsadm and keepalived on both directors (`yum -y install ipvsadm keepalived`).

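The VIP lives on eth0 of whichever director is currently MASTER. keepalived adds it to eth0 and removes it automatically, so it is not configured there by hand. The VIP must also be bound to the lo loopback interface of both realservers.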

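As covered in an earlier post, keepalived has a built-in IPVS module. The LVS configuration can therefore be written directly in its configuration file, with no separate setup through the ipvsadm command.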
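For comparison, the `virtual_server` section that keepalived manages in this post corresponds roughly to the ipvsadm commands below. The sketch only prints the commands. The function name `ipvs_dr_cmds` is hypothetical. keepalived programs IPVS itself, so only pipe the output into `sh` on a director where keepalived is not managing the same virtual service.

```shell
# Hypothetical helper: print ipvsadm commands for a DR-mode, round-robin
# virtual service with persistence, one command per line.
# Usage: ipvs_dr_cmds VIP PORT PERSIST_SECONDS REALSERVER...
ipvs_dr_cmds() {
    local vip=$1 port=$2 persist=$3 rs
    shift 3
    # -A add a virtual service, -t TCP service, -s rr round robin,
    # -p persistence timeout in seconds
    echo "ipvsadm -A -t ${vip}:${port} -s rr -p ${persist}"
    for rs in "$@"; do
        # -a add a realserver, -r its address, -g gatewaying (DR mode), -w weight
        echo "ipvsadm -a -t ${vip}:${port} -r ${rs}:${port} -g -w 1"
    done
}
```

The command `ipvs_dr_cmds 192.168.122.100 80 1 192.168.122.10 192.168.122.20` prints one `-A` line and two `-a` lines. The resulting table can then be compared with `ipvsadm -Ln`.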

II. Director Configuration

keepalived configuration file on the MASTER node:

```
# cat /etc/keepalived/keepalived.conf
global_defs {
    router_id LVS_T1
}

vrrp_sync_group bl_group {
    group {
        bl_one
    }
}

vrrp_instance bl_one {
    state MASTER
    interface eth0
    # interface used by the IPVS connection sync daemon
    lvs_sync_daemon_interface eth0
    # must be identical on both directors
    virtual_router_id 38
    # the director with the higher priority becomes MASTER
    priority 150
    # VRRP advertisement interval, in seconds
    advert_int 3
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.122.100
    }
}

virtual_server 192.168.122.100 80 {
    # health check interval, in seconds
    delay_loop 3
    lb_algo rr
    lb_kind DR
    # client affinity window, in seconds
    persistence_timeout 1
    protocol TCP
    real_server 192.168.122.10 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 10
            # newer keepalived releases name this option "retry"
            nb_get_retry 3
            delay_before_retry 3
            connect_port 80
        }
    }
    real_server 192.168.122.20 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 10
            nb_get_retry 3
            delay_before_retry 3
            connect_port 80
        }
    }
}
```

keepalived configuration file on the BACKUP director:

```
# cat /etc/keepalived/keepalived.conf
# identical to the MASTER except for "state" and "priority"
global_defs {
    router_id LVS_T1
}

vrrp_sync_group bl_group {
    group {
        bl_one
    }
}

vrrp_instance bl_one {
    state BACKUP
    interface eth0
    lvs_sync_daemon_interface eth0
    virtual_router_id 38
    # must be lower than the MASTER's priority (150)
    priority 100
    advert_int 3
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.122.100
    }
}

virtual_server 192.168.122.100 80 {
    delay_loop 3
    lb_algo rr
    lb_kind DR
    persistence_timeout 1
    protocol TCP
    real_server 192.168.122.10 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 10
            nb_get_retry 3
            delay_before_retry 3
            connect_port 80
        }
    }
    real_server 192.168.122.20 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 10
            nb_get_retry 3
            delay_before_retry 3
            connect_port 80
        }
    }
}
```

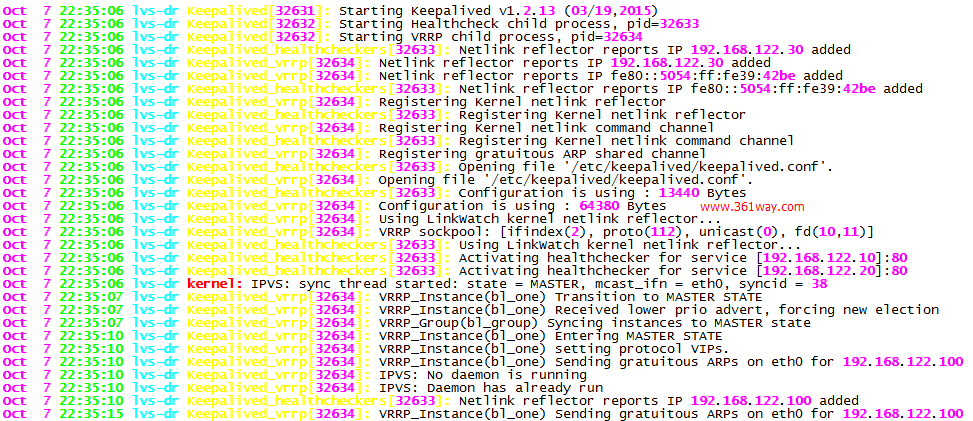

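As the configuration above shows, the health check here is based on IP and port (TCP_CHECK). It can also be switched to a URL-based check (HTTP_GET), as described in the post on keepalived health check methods. When keepalived starts on a director, its startup log entries can be seen in /var/log/messages.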

III. Realserver Configuration

Both realservers use the same script:

```shell
# cat dr_client.sh
#!/bin/bash
VIP=192.168.122.100
BROADCAST=192.168.122.255   # VIP's broadcast address
# RHEL/CentOS init helpers; not strictly required by this script
[ -f /etc/rc.d/init.d/functions ] && . /etc/rc.d/init.d/functions
case "$1" in
    start)
        echo "Preparing for Real Server"
        # arp_ignore=1: answer ARP only for addresses configured on the receiving
        # interface, so ARP requests for the VIP arriving on eth0 go unanswered
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        # arp_announce=2: always use the best local address of the outgoing
        # interface as the ARP source address, never the VIP
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        # bind the VIP to a loopback alias with a host (/32) netmask
        ifconfig lo:0 $VIP netmask 255.255.255.255 broadcast $BROADCAST up
        /sbin/route add -host $VIP dev lo:0
        ;;
    stop)
        ifconfig lo:0 down
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        ;;
    *)
        echo "Usage: $0 {start|stop}"
        exit 1
esac
```
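After running `dr_client.sh start`, you can read the ARP settings back to confirm they took effect. The function below is a sketch of that check. The name `check_rs_arp` and its optional directory argument are hypothetical. The argument exists only so the check can be pointed at a copy of the /proc tree.

```shell
# Hypothetical helper: verify the ARP sysctls that dr_client.sh sets.
# Usage: check_rs_arp [CONF_DIR]   (CONF_DIR defaults to /proc/sys/net/ipv4/conf)
# Prints each mismatch; returns 1 if any value differs, 0 otherwise.
check_rs_arp() {
    local dir=${1:-/proc/sys/net/ipv4/conf}
    local rc=0 iface pair key want val
    for iface in lo all; do
        for pair in arp_ignore=1 arp_announce=2; do
            key=${pair%%=*}    # sysctl name, e.g. arp_ignore
            want=${pair#*=}    # expected value, e.g. 1
            val=$(cat "$dir/$iface/$key" 2>/dev/null)
            if [ "$val" != "$want" ]; then
                echo "$iface/$key=${val:-missing} (expected $want)"
                rc=1
            fi
        done
    done
    return $rc
}
```

On each realserver, `check_rs_arp && echo OK` should print OK once the script has run. `ip addr show dev lo` should also list 192.168.122.100/32.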

IV. Testing

Start keepalived on both directors and run the script on both realservers:

```shell
# /etc/init.d/keepalived start
# sh dr_client.sh start
```

Start httpd on both realservers, and stop the firewall or allow port 80. The following script can be used to test access:

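```shell
#!/bin/bash
# Send 100 requests to the VIP and append each response to /tmp/q
# (the C-style for loop is bash syntax, so the shebang must be bash, not sh)
for ((i=1; i<=100; i++)); do
    curl -s http://192.168.122.100 >> /tmp/q
done
```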
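The curl loop only appends raw pages to /tmp/q. To see how the requests were spread, you can count the responses per realserver. This sketch makes a hypothetical assumption, since the post does not say how its test pages differ: each realserver's index.html contains that server's own IP address. Such a page could be created with `echo 192.168.122.10 > /var/www/html/index.html`. `tally_responses` is a made-up name.

```shell
# Hypothetical helper: count responses per realserver in FILE (default /tmp/q).
# ASSUMPTION: each realserver's page contains its own IP address.
# Prints "IP COUNT", one line per realserver seen.
tally_responses() {
    local file=${1:-/tmp/q}
    # -o: print only the match; -w: whole words only, so .10 does not match inside .100
    grep -owE '192\.168\.122\.(10|20)' "$file" | sort | uniq -c | awk '{print $2, $1}'
}
```

Because the loop appends with `>>`, empty the file (`: > /tmp/q`) before each run. Then call `tally_responses` after the loop finishes.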

1. Basic test

Observe the IPVS table with ipvsadm on either director:

```
[root@lvs-dr ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.122.100:80 rr persistent 1
  -> 192.168.122.10:80            Route   1      0          0
  -> 192.168.122.20:80            Route   1      0          100
```

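Note: one issue shows up here. Even with the round-robin (rr) algorithm, a single client gets unbalanced results: for a period of time all of its requests go to the same realserver. In the output above, all 100 connections went to 192.168.122.20. When several clients access the VIP, the results are balanced. This is most likely caused by `persistence_timeout 1` in the configuration. IPVS persistence pins each client IP to one realserver, and because the curl loop keeps opening new connections, that affinity keeps being renewed. Removing `persistence_timeout` should let a single client's requests alternate between the realservers.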
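To put numbers on this kind of imbalance, you can pull the per-realserver connection counts out of `ipvsadm -Ln`. The awk sketch below makes two assumptions: the column layout of the output above (Forward, Weight, ActiveConn, InActConn) and a single virtual service. `rs_conn_share` is a hypothetical name.

```shell
# Hypothetical helper: read `ipvsadm -Ln` output on stdin and print, for each
# realserver line, "IP:PORT CONNECTIONS SHARE%" where
# CONNECTIONS = ActiveConn + InActConn. Assumes a single virtual service.
rs_conn_share() {
    awk '
        # realserver lines look like: -> 192.168.122.10:80 Route 1 0 0
        $1 == "->" && $2 ~ /^[0-9.]+:[0-9]+$/ {
            n = $5 + $6
            rs[$2] = n
            total += n
            order[++k] = $2
        }
        END {
            for (i = 1; i <= k; i++) {
                pct = total ? 100 * rs[order[i]] / total : 0
                printf "%s %d %.0f%%\n", order[i], rs[order[i]], pct
            }
        }'
}
```

Run `ipvsadm -Ln | rs_conn_share`. For the table shown above, it reports 0 connections (0%) for 192.168.122.10:80 and 100 (100%) for 192.168.122.20:80.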

2. Realserver failure test

Shut down one of the realservers. Checking with curl shows that every request now returns the healthy host's page, and /var/log/messages shows entries like these:

```
Oct 7 22:35:10 lvs-dr Keepalived_vrrp[32634]: IPVS: Daemon has already run
Oct 7 22:35:10 lvs-dr Keepalived_healthcheckers[32633]: Netlink reflector reports IP 192.168.122.100 added
Oct 7 22:35:15 lvs-dr Keepalived_vrrp[32634]: VRRP_Instance(bl_one) Sending gratuitous ARPs on eth0 for 192.168.122.100
Oct 7 22:38:16 lvs-dr Keepalived_healthcheckers[32633]: TCP connection to [192.168.122.10]:80 failed !!!
Oct 7 22:38:16 lvs-dr Keepalived_healthcheckers[32633]: Removing service [192.168.122.10]:80 from VS [192.168.122.100]:80
```

After this realserver recovers, /var/log/messages shows:

```
Oct 7 22:39:19 lvs-dr Keepalived_healthcheckers[32633]: TCP connection to [192.168.122.10]:80 success.
Oct 7 22:39:19 lvs-dr Keepalived_healthcheckers[32633]: Adding service [192.168.122.10]:80 to VS [192.168.122.100]:80
```
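To review realserver state changes after such a test, you can filter the healthchecker messages out of the log. The sketch below matches the message wording in the log lines above. `rs_events` is a hypothetical name. Other keepalived versions may phrase these messages differently, so adjust the pattern if nothing matches.

```shell
# Hypothetical helper: list realserver add/remove events from a syslog file
# (default /var/log/messages) as "TIMESTAMP ACTION IP:PORT".
rs_events() {
    local log=${1:-/var/log/messages}
    # keep only healthchecker "Removing service"/"Adding service" lines and
    # rewrite them to: timestamp, action, realserver address
    sed -nE 's/^([A-Z][a-z]{2} +[0-9]+ [0-9:]+) .*Keepalived_healthcheckers.*(Removing|Adding) service \[([0-9.]+)\]:([0-9]+).*/\1 \2 \3:\4/p' "$log"
}
```

For the log lines above, `rs_events` prints one `Removing` entry at 22:38:16 and one `Adding` entry at 22:39:19 for 192.168.122.10:80.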

3. Director failure test

After the keepalived service on the MASTER director is stopped, the BACKUP director logs the following:

```
Oct 7 22:40:14 lvs-dr Keepalived_vrrp[1601]: VRRP_Instance(bl_one) Transition to MASTER STATE
Oct 7 22:40:14 lvs-dr Keepalived_vrrp[1601]: VRRP_Group(bl_group) Syncing instances to MASTER state
Oct 7 22:40:17 lvs-dr Keepalived_vrrp[1601]: VRRP_Instance(bl_one) Entering MASTER STATE
Oct 7 22:40:17 lvs-dr Keepalived_vrrp[1601]: VRRP_Instance(bl_one) setting protocol VIPs.
Oct 7 22:40:17 lvs-dr Keepalived_vrrp[1601]: VRRP_Instance(bl_one) Sending gratuitous ARPs on eth0 for 192.168.122.100
Oct 7 22:40:17 lvs-dr Keepalived_healthcheckers[1600]: Netlink reflector reports IP 192.168.122.100 added
```

Access to the service through 192.168.122.100 is unaffected during the failover.
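During this test it helps to know which director currently owns the VIP, because keepalived adds 192.168.122.100 only on the director in MASTER state. The following is a minimal sketch under two assumptions: the iproute2 `ip` command is available, and the VIP sits on eth0 as in this post. `has_vip` and `watch_vip` are hypothetical names.

```shell
# Hypothetical helper: succeed if `ip -o -4 addr` output on stdin lists VIP.
# The trailing "/" keeps 192.168.122.10 from matching 192.168.122.100.
has_vip() {
    grep -qF -- " $1/"
}

# Hypothetical helper: poll once per second and print a timestamped line
# whenever this host gains or loses VIP on IFACE (default eth0).
watch_vip() {
    local vip=$1 iface=${2:-eth0} prev="" cur
    while sleep 1; do
        if ip -o -4 addr show dev "$iface" | has_vip "$vip"; then
            cur="holds VIP"
        else
            cur="no VIP"
        fi
        [ "$cur" != "$prev" ] && echo "$(date '+%T') $cur"
        prev=$cur
    done
}
```

Run `watch_vip 192.168.122.100` on the BACKUP director, then stop keepalived on the MASTER. The output should show the moment the BACKUP takes over the VIP.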


- Author: shisekong

- Link: https://blog.361way.com/lvs-keepalived-dr-master-backup/5221.html

- License: This work is under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. Kindly fulfill the requirements of the aforementioned License when adapting or creating a derivative of this work.