MCP: cross-cluster pod access across multiple clouds

I. Requirements

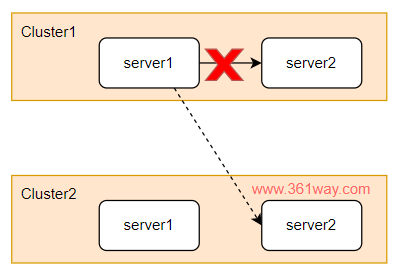

A recent customer requirement: container services must run on Kubernetes clusters hosted by different cloud providers. Normally server1 reaches server2 through the service in its own cluster, but when server2 in the local cluster fails, server1 in cluster1 must be able to connect to server2 in cluster2.

The key to this requirement is twofold: letting pods in clusters in different regions reach each other, and making sure each side learns promptly when service information on the other side changes.

IP network segments

| Cluster | Node network | Pod network |
|---|---|---|
| brazil | 192.168.34.0/24 | 172.16.0.0/16 |
| chile | 192.168.4.0/23 | 10.0.0.0/16 |

Implementing this requirement means connecting the node networks and pod networks of the brazil and chile clusters, so none of these node and pod CIDR ranges may overlap.
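
The non-overlap condition can be checked before any VPN is configured. The following is a minimal sketch using Python's standard `ipaddress` module; the CIDRs are copied from the table above, while the dictionary labels and the function name `find_overlaps` are illustrative assumptions rather than part of the original setup.

```python
import ipaddress
from itertools import combinations

# CIDRs copied from the table above; the labels are illustrative only.
NETWORKS = {
    "brazil-node": "192.168.34.0/24",
    "brazil-pod": "172.16.0.0/16",
    "chile-node": "192.168.4.0/23",
    "chile-pod": "10.0.0.0/16",
}


def find_overlaps(networks):
    """Return the sorted pairs of labels whose CIDR ranges overlap."""
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    return sorted(
        (a, b)
        for a, b in combinations(sorted(parsed), 2)
        if parsed[a].overlaps(parsed[b])
    )


if __name__ == "__main__":
    overlaps = find_overlaps(NETWORKS)
    print(overlaps if overlaps else "no overlapping networks")
```

An empty result means every pair of ranges is disjoint; any pair that is returned would have to be re-addressed before the networks are joined.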

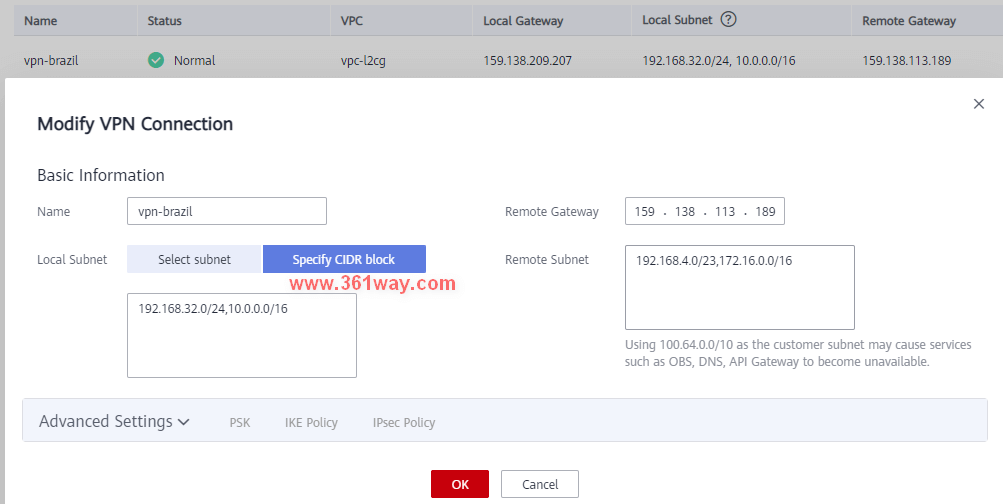

II. Connecting the networks over VPN

The figure below shows the VPN configuration on HUAWEI CLOUD. Because VPN setup is fairly simple, only the main settings are listed here and the step-by-step procedure is omitted. With this configuration in place, the node and pod networks in Brazil and Chile can reach each other.

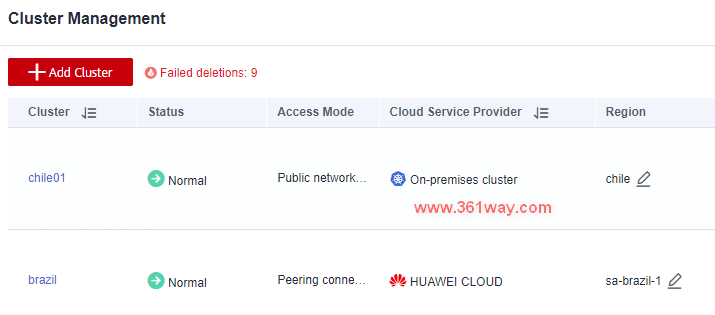

III. MCP cluster configuration

The MCP configuration is not described in detail here either. Because this setup uses the MCP cloud service in the HUAWEI CLOUD Brazil region, the Brazil CCE cluster joins over an internal peering connection, while the Chile CCE cluster joins through its public Kubernetes API server endpoint.

Get the cluster information from the command line:

    [root@brazil-11627 ~]# kubectl --kubeconfig=/root/mcp.json get clusters
    NAME      VERSION                MODE   READY   AGE
    brazil    v1.21.4-r0-CCE22.5.1   Push   True    3d1h
    chile01   v1.21.4-r0-CCE22.5.1   Push   True    3d

IV. Exporting and importing services between clusters

The MCP web console mainly provides multi-cluster management. Service export and import can only be invoked through the API and cannot be configured in the web console. For details, see the Karmada documentation: https://karmada.io/docs/userguide/service/multi-cluster-service/

1. Create the CRD propagation policies

    [root@brazil-11627 xsy]# cat propagate-mcs-crd.yaml
    # propagate ServiceExport CRD
    apiVersion: policy.karmada.io/v1alpha1
    kind: ClusterPropagationPolicy
    metadata:
      name: serviceexport-policy
    spec:
      resourceSelectors:
        - apiVersion: apiextensions.k8s.io/v1
          kind: CustomResourceDefinition
          name: serviceexports.multicluster.x-k8s.io
      placement:
        clusterAffinity:
          clusterNames:
            - brazil
            - chile01
    ---
    # propagate ServiceImport CRD
    apiVersion: policy.karmada.io/v1alpha1
    kind: ClusterPropagationPolicy
    metadata:
      name: serviceimport-policy
    spec:
      resourceSelectors:
        - apiVersion: apiextensions.k8s.io/v1
          kind: CustomResourceDefinition
          name: serviceimports.multicluster.x-k8s.io
      placement:
        clusterAffinity:
          clusterNames:
            - brazil
            - chile01

For the member clusters to export and import services, the ServiceExport and ServiceImport CRDs must be present in them. The two ClusterPropagationPolicy objects in this YAML file propagate those CRDs to the brazil and chile01 clusters, which is what later allows a service in the Chile cluster to be imported into the Brazil cluster.

    [root@brazil-11627 xsy]# kubectl --kubeconfig=/root/mcp.json apply -f propagate-mcs-crd.yaml
    clusterpropagationpolicy.policy.karmada.io/serviceexport-policy created
    clusterpropagationpolicy.policy.karmada.io/serviceimport-policy created
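
The two policies above differ only in their name and in the CRD they select, so they can also be generated. The sketch below is one possible way to do that in Python; the helper names `crd_propagation_policy` and `mcs_crd_policies` are hypothetical, and wrapping the objects in a `v1` `List` is an assumption made so that the result can be written out as a single JSON document.

```python
import json

# Policy name -> CRD name, taken from propagate-mcs-crd.yaml above.
MCS_CRDS = {
    "serviceexport-policy": "serviceexports.multicluster.x-k8s.io",
    "serviceimport-policy": "serviceimports.multicluster.x-k8s.io",
}


def crd_propagation_policy(policy_name, crd_name, clusters):
    """Build a ClusterPropagationPolicy that pushes one CRD to the given clusters."""
    return {
        "apiVersion": "policy.karmada.io/v1alpha1",
        "kind": "ClusterPropagationPolicy",
        "metadata": {"name": policy_name},
        "spec": {
            "resourceSelectors": [
                {
                    "apiVersion": "apiextensions.k8s.io/v1",
                    "kind": "CustomResourceDefinition",
                    "name": crd_name,
                }
            ],
            "placement": {"clusterAffinity": {"clusterNames": list(clusters)}},
        },
    }


def mcs_crd_policies(clusters):
    """Return one policy per MCS CRD, wrapped in a v1 List (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            crd_propagation_policy(name, crd, clusters)
            for name, crd in MCS_CRDS.items()
        ],
    }


if __name__ == "__main__":
    print(json.dumps(mcs_crd_policies(["brazil", "chile01"]), indent=2))
```

The printed JSON carries the same fields as the YAML file, so adding a third member cluster only requires extending the list passed to `mcs_crd_policies`.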

2. Deploy the application to the chile01 cluster

The application can be queried with curl and returns node and pod information.

    [root@brazil-11627 xsy]# cat demo-serve.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: serve
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: serve
      template:
        metadata:
          labels:
            app: serve
        spec:
          containers:
            - name: serve
              image: jeremyot/serve:0a40de8
              args:
                - "--message='hello from cluster 1 (Node: {{env \"NODE_NAME\"}} Pod: {{env \"POD_NAME\"}} Address: {{addr}})'"
              env:
                - name: NODE_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: spec.nodeName
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: serve
    spec:
      ports:
        - port: 80
          targetPort: 8080
      selector:
        app: serve
    ---
    apiVersion: policy.karmada.io/v1alpha1
    kind: PropagationPolicy
    metadata:
      name: mcs-workload
    spec:
      resourceSelectors:
        - apiVersion: apps/v1
          kind: Deployment
          name: serve
        - apiVersion: v1
          kind: Service
          name: serve
      placement:
        clusterAffinity:
          clusterNames:
            - chile01

    [root@brazil-11627 xsy]# kubectl --kubeconfig=/root/mcp.json apply -f demo-serve.yaml
    deployment.apps/serve created
    service/serve created
    propagationpolicy.policy.karmada.io/mcs-workload created

3. Export the service from the chile01 cluster

    [root@brazil-11627 xsy]# cat export-service.yaml
    apiVersion: multicluster.x-k8s.io/v1alpha1
    kind: ServiceExport
    metadata:
      name: serve
    ---
    apiVersion: policy.karmada.io/v1alpha1
    kind: PropagationPolicy
    metadata:
      name: serve-export-policy
    spec:
      resourceSelectors:
        - apiVersion: multicluster.x-k8s.io/v1alpha1
          kind: ServiceExport
          name: serve
      placement:
        clusterAffinity:
          clusterNames:
            - chile01

    [root@brazil-11627 xsy]# kubectl --kubeconfig=/root/mcp.json apply -f export-service.yaml
    serviceexport.multicluster.x-k8s.io/serve created
    propagationpolicy.policy.karmada.io/serve-export-policy created

The export information is recorded in the MCP (Karmada) database.

4. Import the service into the brazil cluster

    [root@brazil-11627 xsy]# cat import-service.yaml
    apiVersion: multicluster.x-k8s.io/v1alpha1
    kind: ServiceImport
    metadata:
      name: serve
    spec:
      type: ClusterSetIP
      ports:
        - port: 80
          protocol: TCP
    ---
    apiVersion: policy.karmada.io/v1alpha1
    kind: PropagationPolicy
    metadata:
      name: serve-import-policy
    spec:
      resourceSelectors:
        - apiVersion: multicluster.x-k8s.io/v1alpha1
          kind: ServiceImport
          name: serve
      placement:
        clusterAffinity:
          clusterNames:
            - brazil

    [root@brazil-11627 xsy]# kubectl --kubeconfig=/root/mcp.json apply -f import-service.yaml
    serviceimport.multicluster.x-k8s.io/serve created
    propagationpolicy.policy.karmada.io/serve-import-policy created

Note: if the Service being imported names its ports in the port declaration, the ServiceImport resource must specify the port names as well.
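
One way to keep the port names in step is to derive the ServiceImport ports from the Service definition instead of typing them twice. The following Python sketch illustrates the idea; `service_import_for` is a hypothetical helper, the default protocol of `TCP` mirrors the Kubernetes default for Service ports, and the named `http` port in the example is an assumption (the `serve` Service in this article leaves its port unnamed).

```python
def service_import_for(service):
    """Build a ServiceImport whose ports mirror the ports of the given Service."""
    ports = []
    for svc_port in service["spec"]["ports"]:
        entry = {
            "port": svc_port["port"],
            "protocol": svc_port.get("protocol", "TCP"),
        }
        # Carry the port name over only when the Service declares one.
        if "name" in svc_port:
            entry["name"] = svc_port["name"]
        ports.append(entry)
    return {
        "apiVersion": "multicluster.x-k8s.io/v1alpha1",
        "kind": "ServiceImport",
        "metadata": {"name": service["metadata"]["name"]},
        "spec": {"type": "ClusterSetIP", "ports": ports},
    }


# Example input: the serve Service with an assumed port name "http".
named_service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "serve"},
    "spec": {
        "ports": [{"name": "http", "port": 80, "targetPort": 8080}],
        "selector": {"app": "serve"},
    },
}

if __name__ == "__main__":
    print(service_import_for(named_service)["spec"]["ports"])
```

For an unnamed port the helper produces the same `port: 80` / `protocol: TCP` entry used in import-service.yaml above.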

V. Verification

After the steps above, a service with the derived- prefix is created in the brazil cluster; the rest of its name matches the service name in chile01.

    [root@brazil-11627 xsy]# kubectl --kubeconfig=/root/chile.json get svc
    NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.247.0.1       <none>        443/TCP   3d1h
    serve        ClusterIP   10.247.132.154   <none>        80/TCP    14m
    [root@brazil-11627 xsy]# kubectl --kubeconfig=/root/brazil.json get svc
    NAME            TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
    derived-serve   ClusterIP   10.247.79.131   <none>        80/TCP    7m19s
    kubernetes      ClusterIP   10.247.0.1      <none>        443/TCP   3d1h
    nginx           ClusterIP   10.247.98.88    <none>        80/TCP    3d1h
    [root@brazil-11627 xsy]# kubectl --kubeconfig=/root/brazil.json get endpointslices
    NAME                           ADDRESSTYPE   PORTS   ENDPOINTS       AGE
    imported-chile01-serve-2rncz   IPv4          8080    172.16.0.5      7m22s
    imported-chile01-serve-k9qrq   IPv4          8080    172.16.0.13     23h
    kubernetes                     IPv4          5444    192.168.32.92   3d1h
    nginx-282dt                    IPv4          80      10.0.0.5        3d1h

The iptables rules also show that DNAT entries have been added:

    [root@brazil-11627 ~]# iptables-save |grep derived-serve
    -A KUBE-SEP-EB5BNMBADBON6GCK -s 172.16.0.5/32 -m comment --comment "default/derived-serve" -j KUBE-MARK-MASQ
    -A KUBE-SEP-EB5BNMBADBON6GCK -p tcp -m comment --comment "default/derived-serve" -m tcp -j DNAT --to-destination 172.16.0.5:8080
    -A KUBE-SERVICES -d 10.247.79.131/32 -p tcp -m comment --comment "default/derived-serve cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ
    -A KUBE-SERVICES -d 10.247.79.131/32 -p tcp -m comment --comment "default/derived-serve cluster IP" -m tcp --dport 80 -j KUBE-SVC-AONYAOU5JJUPIL5D
    -A KUBE-SVC-AONYAOU5JJUPIL5D -m comment --comment "default/derived-serve" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-AV6OKBG5RYJ47XCG
    -A KUBE-SVC-AONYAOU5JJUPIL5D -m comment --comment "default/derived-serve" -j KUBE-SEP-EB5BNMBADBON6GCK
    [root@brazil-11627 ~]# kubectl --kubeconfig=/root/chile.json get endpoints
    NAME           ENDPOINTS         AGE
    kubernetes     192.168.5.1:5444  3d2h
    serve          172.16.0.5:8080   27m
    test-service   172.16.0.3:5444   3d1h

The result is the same with ipvs instead of iptables: Karmada creates the derived Service and the imported EndpointSlices in the member cluster, and kube-proxy then programs the corresponding forwarding rules in whichever mode it runs.
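
To compare the DNAT targets with the endpoints reported by the chile01 cluster, the `iptables-save` output can be parsed mechanically. The sketch below is a small Python illustration that relies only on the rule format visible in the listing above; the function name `dnat_targets` and the regular expression are assumptions, and the sample lines are copied from that listing.

```python
import re

# Matches kube-proxy DNAT rules of the form shown in the listing above.
DNAT_RE = re.compile(
    r'--comment "(?P<service>[^"]+)".* -j DNAT --to-destination (?P<dest>\S+)'
)


def dnat_targets(iptables_save_output, service):
    """Return the DNAT destinations recorded for one namespace/service comment."""
    targets = []
    for line in iptables_save_output.splitlines():
        match = DNAT_RE.search(line)
        if match and match.group("service") == service:
            targets.append(match.group("dest"))
    return targets


# Three of the rules from the iptables-save output above.
SAMPLE = "\n".join([
    '-A KUBE-SEP-EB5BNMBADBON6GCK -s 172.16.0.5/32 -m comment --comment "default/derived-serve" -j KUBE-MARK-MASQ',
    '-A KUBE-SEP-EB5BNMBADBON6GCK -p tcp -m comment --comment "default/derived-serve" -m tcp -j DNAT --to-destination 172.16.0.5:8080',
    '-A KUBE-SVC-AONYAOU5JJUPIL5D -m comment --comment "default/derived-serve" -j KUBE-SEP-EB5BNMBADBON6GCK',
])

if __name__ == "__main__":
    print(dnat_targets(SAMPLE, "default/derived-serve"))
```

The returned address and port can then be compared with the `serve` entry in the `get endpoints` output from chile01.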

    [root@brazil-11627 ~]# kubectl --kubeconfig=/root/brazil.json run -i --rm --restart=Never --image=jeremyot/request:0a40de8 request -- --duration=5s --address=derived-serve
    If you don't see a command prompt, try pressing enter.
    2022/08/19 16:41:34 'hello from cluster 1 (Node: 192.168.5.72 Pod: serve-645cb7c7f5-vpn8w Address: 172.16.0.5)'
    2022/08/19 16:41:35 'hello from cluster 1 (Node: 192.168.5.72 Pod: serve-645cb7c7f5-vpn8w Address: 172.16.0.5)'
    2022/08/19 16:41:36 'hello from cluster 1 (Node: 192.168.5.72 Pod: serve-645cb7c7f5-vpn8w Address: 172.16.0.5)'
    2022/08/19 16:41:37 'hello from cluster 1 (Node: 192.168.5.72 Pod: serve-645cb7c7f5-vpn8w Address: 172.16.0.5)'
    pod "request" deleted

    # scale up the number of pods, then run the test again
    [root@brazil-11627 ~]# kubectl --kubeconfig=/root/mcp.json scale deployment/serve --replicas=2
    [root@brazil-11627 ~]# kubectl --kubeconfig=/root/brazil.json run -i --rm --restart=Never --image=jeremyot/request:0a40de8 request -- --duration=5s --address=derived-serve
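
The original requirement asks for the local service to be preferred and the remote one to be used only when the local one fails. The walkthrough above only demonstrates reaching derived-serve, so the following Python sketch shows one possible client-side way to express that preference. It is an assumption, not something MCP or Karmada provides: the local address `http://serve` presumes a copy of the service also runs in the brazil cluster (which is not deployed here), and the names `http_get` and `first_reachable` are hypothetical.

```python
import urllib.request

# Hypothetical addresses: "serve" would be a local copy of the service in the
# brazil cluster (not deployed in this walkthrough); "derived-serve" is the
# imported service created above.
CANDIDATES = ["http://serve", "http://derived-serve"]


def http_get(url, timeout=2.0):
    """Fetch a URL and return the response body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def first_reachable(urls, fetcher=http_get):
    """Try each URL in order and return (url, body) for the first that answers."""
    failures = []
    for url in urls:
        try:
            return url, fetcher(url)
        except OSError as exc:  # covers URLError, timeouts and refused connections
            failures.append(f"{url}: {exc}")
    raise ConnectionError("no endpoint reachable: " + "; ".join(failures))
```

Calling `first_reachable(CANDIDATES)` from a pod in the brazil cluster would try the local address first and fall back to the imported service only when the first request raises an error.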

VI. Resource deletion

    [root@brazil-11627 xsy]# cat cleanmcp.sh
    kubectl --kubeconfig=/root/mcp.json delete -f import-service.yaml
    kubectl --kubeconfig=/root/mcp.json delete -f export-service.yaml
    kubectl --kubeconfig=/root/mcp.json delete -f demo-serve.yaml
    kubectl --kubeconfig=/root/mcp.json delete -f propagate-mcs-crd.yaml

For now, deleting these resources through the API is recommended. The MCP web console does not implement the import and export feature, so these resources never appear there, and that is expected. As a result, the console can look fully cleaned up while resources still exist in the back end. This is a limitation of the web console itself.

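Writing the English part as well was really exhausting, but many of the cases I handle require communicating in English, so I am pushing myself. Finally, thanks to the Karmada developers: they gave a lot of patient help with what is described in this post, and I learned a great deal from talking with them.

Lastly, because the core functionality of MCP is being merged into UCS, the MCP cloud service will gradually be superseded by UCS. I hope UCS becomes available in overseas regions as soon as possible.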


- Author: shisekong

- Link: https://blog.361way.com/mcp-multi-pod-access/6873.html

- License: This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. Kindly fulfill the requirements of this license when adapting or creating a derivative of this work.