Deploy, Scale and Roll Back an Application with Helm

Introduction

Containers have revolutionized application development and delivery on account of their ease of use, portability and consistency. And when it comes to automatically deploying and managing containers in the cloud (public, private or hybrid), one of the most popular options today is Kubernetes.

Kubernetes is an open source project designed specifically for container orchestration. Kubernetes offers a number of key features, including multiple storage APIs, container health checks, manual or automatic scaling, rolling upgrades and service discovery. Applications can be installed to a Kubernetes cluster via Helm charts, which provide streamlined package management functions.

Assumptions and Prerequisites

This guide focuses on deploying an example phpBB application in a Kubernetes cluster running on either Huawei Cloud CCE or Minikube.

This guide makes the following assumptions:

- You have a Kubernetes 1.5.0 (or later) cluster.

- You have kubectl installed and configured to work with your Kubernetes cluster.

- You have git installed and configured.

- You have Helm 3 installed (the helm commands in this guide use Helm 3 syntax).

- You have a basic understanding of how containers work.

Step 1: Deploy the example application

The smallest deployable unit in Kubernetes is a “pod”. A pod consists of one or more containers which can communicate and share data with each other. Pods make it easy to scale applications: scale up by adding more pods, scale down by removing pods. Learn more about pods.

To deploy the sample application using a Helm chart, follow these steps:

- Clone the Helm chart from Bitnami’s GitHub repository:

    git clone https://github.com/bitnami/charts.git
    cd charts/bitnami/phpbb

- Check for and install missing dependencies with helm dep. The Helm chart used in this example is dependent on the mariadb chart in the official repository, so the commands below will take care of identifying and installing the missing dependency.

    helm dep list
    helm dep update
    helm dep build

Here’s what you should see:

    [root@ccetest-87180 phpbb]# helm repo add bitnami https://charts.bitnami.com/bitnami
    "bitnami" has been added to your repositories

    [root@ccetest-87180 phpbb]# helm dep list
    NAME     VERSION  REPOSITORY                          STATUS
    mariadb  11.x.x   https://charts.bitnami.com/bitnami  missing
    common   2.x.x    https://charts.bitnami.com/bitnami  missing

    [root@ccetest-87180 phpbb]# helm dep update
    Hang tight while we grab the latest from your chart repositories...
    ...Successfully got an update from the "prometheus-community" chart repository
    ...Successfully got an update from the "bitnami" chart repository
    ...Successfully got an update from the "stable" chart repository
    Update Complete. ⎈Happy Helming!⎈
    Saving 2 charts
    Downloading mariadb from repo https://charts.bitnami.com/bitnami
    Downloading common from repo https://charts.bitnami.com/bitnami
    Deleting outdated charts

    [root@ccetest-87180 phpbb]# helm dep build
    Hang tight while we grab the latest from your chart repositories...
    ...Successfully got an update from the "prometheus-community" chart repository
    ...Successfully got an update from the "bitnami" chart repository
    ...Successfully got an update from the "stable" chart repository
    Update Complete. ⎈Happy Helming!⎈
    Saving 2 charts
    Downloading mariadb from repo https://charts.bitnami.com/bitnami
    Downloading common from repo https://charts.bitnami.com/bitnami
    Deleting outdated charts
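As a rough sketch of how the STATUS column in the listing above could be checked from a script, the function below reads `helm dep list` output on standard input and prints the names of the dependencies marked `missing`. The function name `missing_deps` is hypothetical, and the sketch assumes the four-column NAME / VERSION / REPOSITORY / STATUS layout shown above.

```shell
# missing_deps (hypothetical helper): read `helm dep list` output on stdin
# and print the name of every dependency whose STATUS column is "missing".
# Assumes the NAME / VERSION / REPOSITORY / STATUS layout shown above.
missing_deps() {
  # Skip the header row (NR > 1); the status is the last field of each row.
  awk 'NR > 1 && $NF == "missing" { print $1 }'
}

# Usage: helm dep list | missing_deps
```

If the function prints nothing, every dependency is already present and `helm dep update` can be skipped.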

- Lint the chart with helm lint to ensure it has no errors.

    helm lint .

- Deploy the Helm chart with helm install. Pay special attention to the NOTES section of the output, as it contains important information for accessing the application. On Huawei Cloud CCE, edit the values.yaml file before installing and set the storage class to csi-disk.

    [root@ccetest-87180 phpbb]# helm install myforum . --set service.type=NodePort
    NAME: myforum
    ……

    1. Access you phpBB instance with:
      export NODE_PORT=$(kubectl get --namespace default -o jsonpath="{.spec.ports[0].nodePort}" services myforum-phpbb)
      export NODE_IP=$(kubectl get nodes --namespace default -o jsonpath="{.items[0].status.addresses[0].address}")
      echo "phpBB URL: http://$NODE_IP:$NODE_PORT/"

    2. Login with the following credentials

      echo Username: user
      echo Password: $(kubectl get secret --namespace default myforum-phpbb -o jsonpath="{.data.phpbb-password}" | base64 -d)

If your cluster supports services of type LoadBalancer, use the command below instead:

    helm install myforum . --set service.type=LoadBalancer

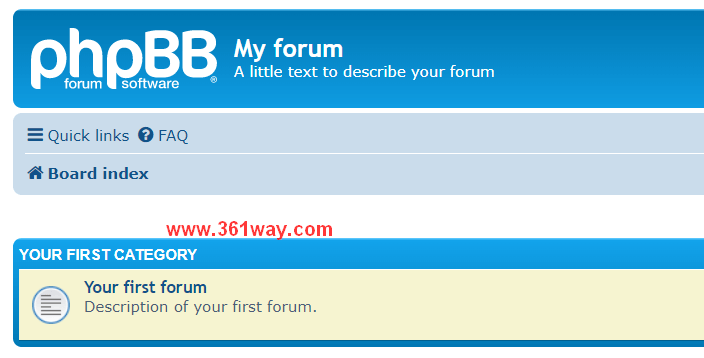

- Browse to the specified URL and you should see the sample application running. Here’s what it should look like:

- To debug and diagnose deployment problems, use kubectl get pods -l app.kubernetes.io/instance=myforum. If you specified a different release name, remember to use the actual release name from your deployment.

- To delete and reinstall the Helm chart at any time, use the helm delete command, shown below.

    helm delete myforum
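The NOTES output shown earlier in this step pipes the Secret value through base64 -d because Kubernetes stores Secret data base64-encoded. A minimal sketch of wrapping that lookup in a reusable function follows; the name `forum_password` is hypothetical, and the Secret name and key are taken from the NOTES output above.

```shell
# forum_password (hypothetical helper): print the decoded phpBB password
# for a release. Kubernetes stores Secret values base64-encoded, so the
# raw jsonpath result has to be decoded before it can be used to log in.
forum_password() {
  local release="$1" namespace="${2:-default}"
  kubectl get secret --namespace "$namespace" "${release}-phpbb" \
    -o jsonpath='{.data.phpbb-password}' | base64 -d
}

# Usage: forum_password myforum
```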

Step 2: Explore Kubernetes and Helm

2.1 Scale up (or down)

For simplicity, this section focuses only on scaling the phpBB pod.

As more and more users access your application, it becomes necessary to scale up in order to handle the increased load. Conversely, during periods of low demand, it often makes sense to scale down to optimize resource usage.

Kubernetes provides the kubectl scale command to scale the number of pods in a deployment up or down. Learn more about the kubectl scale command.

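Check the state of the release with the helm status command, as shown below. Note that Helm 3 does not list the release's resources in this output by default; to see the number of pods currently running, use kubectl get deployment myforum-phpbb.

    helm status myforum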

Then, scale the phpbb pod up to three copies using the kubectl scale command below.

    [root@ccetest-87180 phpbb]# kubectl scale --replicas 3 deployment/myforum-phpbb
    deployment.apps/myforum-phpbb scaled

Then, scale it back down to two using the command below:

    kubectl scale --replicas 2 deployment/myforum-phpbb
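When scaling from a script, it can help to wait until the new replica count has actually been reached before moving on. The sketch below combines kubectl scale with kubectl rollout status; the function name `scale_and_wait` and the 120-second timeout are assumptions chosen for illustration.

```shell
# scale_and_wait (hypothetical helper): set the replica count of a
# Deployment, then block until the rollout reports that it has finished
# or the (assumed) 120-second timeout expires.
scale_and_wait() {
  local deployment="$1" replicas="$2"
  kubectl scale --replicas "$replicas" "deployment/${deployment}" &&
    kubectl rollout status "deployment/${deployment}" --timeout=120s
}

# Usage: scale_and_wait myforum-phpbb 3
```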

A key feature of Kubernetes is that it is a self-healing system: if one or more pods in a Kubernetes cluster are terminated unexpectedly, the cluster will automatically spin up replacements. This ensures that the required number of pods are always running at any given time.

To see this in action, use the kubectl get pods command to get a list of running pods, as shown below:

    [root@ccetest-87180 phpbb]# kubectl get pods |grep phpbb
    myforum-phpbb-f5447c564-gjt4n   1/1   Running   0   13m
    myforum-phpbb-f5447c564-jjk8v   1/1   Running   0   2m41s

As you can see, this cluster is now running two phpBB pods. Now, select one of the pods and simulate a pod failure by deleting it with a command like the one below. Replace the POD-ID placeholder with an actual pod identifier from the output of the kubectl get pods command.

    kubectl delete pod POD-ID

Now, run kubectl get pods again and you will see that Kubernetes has replaced the failed pod with a new one:

    [root@ccetest-87180 phpbb]# kubectl delete pod myforum-phpbb-f5447c564-gjt4n
    pod "myforum-phpbb-f5447c564-gjt4n" deleted
    [root@ccetest-87180 phpbb]# kubectl get pods |grep phpbb
    myforum-phpbb-f5447c564-h74h2   1/1   Running   0   97s
    myforum-phpbb-f5447c564-jjk8v   1/1   Running   0   5m30s

If you watch the pods with kubectl get pods -w while deleting one, you will see the state of the new pod change rapidly from “Pending” to “Running”.
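To check the self-healing behaviour from a script rather than by eye, the pod listing can be counted. The sketch below is one possible approach: a hypothetical `count_running` function that reads kubectl get pods output on standard input and counts the pods with a given name prefix whose STATUS column is Running. It assumes the default column layout shown above.

```shell
# count_running (hypothetical helper): read `kubectl get pods` output on
# stdin and print how many pods whose name starts with the given prefix
# are in the Running state (STATUS is the third column by default).
count_running() {
  awk -v prefix="$1" \
    'index($1, prefix) == 1 && $3 == "Running" { n++ } END { print n + 0 }'
}

# Usage: kubectl get pods | count_running myforum-phpbb
```

Running this before and shortly after deleting a pod should print the same number once the replacement pod has started.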

2.2 Balance traffic between pods

It’s easy enough to spin up two (or more) replicas of the same pod, but how do you route traffic to them? When deploying an application to a Kubernetes cluster in the cloud, you have the option of automatically creating a cloud network load balancer (external to the Kubernetes cluster) to direct traffic between the pods. This load balancer is an example of a Kubernetes Service resource. Learn more about services in Kubernetes.

You’ve already seen a Kubernetes load balancer in action. When deploying the application to a cloud cluster such as Huawei Cloud CCE with Helm, the command used the service.type option to create an external load balancer, as shown below:

    helm install myforum . --set service.type=LoadBalancer

When invoked in this way, Kubernetes will not only create an external load balancer, but will also take care of configuring the load balancer with the internal IP addresses of the pods, setting up firewall rules, and so on. To see details of the load balancer service, use the kubectl describe svc command, as shown below:

    kubectl describe svc myforum

Notice the LoadBalancer Ingress field, which specifies the IP address of the load balancer, and the Endpoints field, which specifies the internal IP addresses of the phpBB pods in use. Similarly, the Port field specifies the port that the load balancer will listen to for connections (in this case, 80, the standard Web server port) and the NodePort field specifies the port on each cluster node through which the service is exposed.

Obviously, this doesn’t work quite the same way on a Minikube cluster running locally. Look back at the deployment command used earlier and you’ll see that the service.type option was set to NodePort. This exposes the service on a specific port on every node in the cluster.

    helm install myforum . --set service.type=NodePort

Verify this by checking the details of the service with kubectl describe svc:

    [root@ccetest-87180 phpbb]# kubectl describe svc myforum
    Name:                     myforum-phpbb
    Namespace:                default
    ……

    Type:                     NodePort
    IP:                       10.247.244.200
    Port:                     http  80/TCP
    TargetPort:               http/TCP
    NodePort:                 http  30693/TCP
    Endpoints:                172.16.0.17:8080,172.16.0.19:8080
    Port:                     https  443/TCP
    TargetPort:               https/TCP
    NodePort:                 https  31578/TCP
    Endpoints:                172.16.0.17:8443,172.16.0.19:8443
    Session Affinity:         None
    External Traffic Policy:  Cluster
    Events:                   <none>

The main difference here is that instead of an external network load balancer service, Kubernetes creates a service that listens on a static port on each node for incoming requests and directs them to the pod endpoints.
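To illustrate how the NodePort value maps to a reachable URL, the sketch below looks up the node port assigned to the service port named http and combines it with a node address, in the same way as the chart's NOTES output. The function name `nodeport_url` is hypothetical, and using the first address of the first node is an assumption that may not suit every cluster.

```shell
# nodeport_url (hypothetical helper): print the URL at which the "http"
# port of a NodePort service can be reached on the first node listed.
nodeport_url() {
  local service="$1" namespace="${2:-default}" port ip
  # Look up the node port assigned to the service port named "http".
  port=$(kubectl get service "$service" --namespace "$namespace" \
    -o jsonpath='{.spec.ports[?(@.name=="http")].nodePort}') || return 1
  # Take the first address of the first node, as the chart's NOTES do.
  ip=$(kubectl get nodes \
    -o jsonpath='{.items[0].status.addresses[0].address}') || return 1
  echo "http://${ip}:${port}/"
}

# Usage: nodeport_url myforum-phpbb
```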

2.3 Perform rolling updates (and rollbacks)

Rolling updates and rollbacks are important benefits of deploying applications into a Kubernetes cluster. With rolling updates, DevOps teams can perform zero-downtime application upgrades, which is an important consideration for production environments. By the same token, Kubernetes also supports rollbacks, which enable easy reversal to a previous version of an application without a service outage. Learn more about rolling updates.

Helm makes it easy to upgrade applications with the helm upgrade command, as shown below:

    helm upgrade myforum .

Check upgrade status with the helm history command shown below:

    helm history myforum

Rollbacks are equally simple – just use the helm rollback command and specify the revision number to roll back to. For example, to roll back to the original version of the application (revision 1), use this command:

    helm rollback myforum 1

When you check the status with helm history, you will see that revision 2 has been superseded by a copy of revision 1, this time labelled as revision 3.

    [root@ccetest-87180 phpbb]# helm history myforum
    REVISION  UPDATED                   STATUS      CHART         APP VERSION  DESCRIPTION
    1         Tue Sep 28 00:53:57 2021  superseded  phpbb-12.3.2  3.3.8        Install complete
    2         Tue Sep 28 01:25:29 2021  deployed    phpbb-12.3.2  3.3.8        Upgrade complete
    [root@ccetest-87180 phpbb]# helm rollback myforum 1
    Rollback was a success! Happy Helming!
    [root@ccetest-87180 phpbb]# helm history myforum
    REVISION  UPDATED                   STATUS      CHART         APP VERSION  DESCRIPTION
    1         Tue Sep 28 00:53:57 2021  superseded  phpbb-12.3.2  3.3.8        Install complete
    2         Tue Sep 28 01:25:29 2021  superseded  phpbb-12.3.2  3.3.8        Upgrade complete
    3         Tue Sep 28 01:26:05 2021  deployed    phpbb-12.3.2  3.3.8        Rollback to 1
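The upgrade and rollback commands above can be combined so that a failed upgrade is reverted automatically. The following is a minimal sketch under stated assumptions, not a definitive implementation: the function name `upgrade_or_rollback` and the five-minute timeout are hypothetical, and the revision to return to is read from the first column of the last row of helm history before the upgrade starts.

```shell
# upgrade_or_rollback (hypothetical helper): upgrade a release and, if the
# upgrade fails or times out, roll back to the revision that was the
# latest one before the upgrade started.
upgrade_or_rollback() {
  local release="$1" chart="$2" previous
  # Remember the newest revision number (first column of the last row).
  previous=$(helm history "$release" | awk 'NR > 1 { rev = $1 } END { print rev }')
  # --wait blocks until the release's resources are ready or the timeout hits.
  if helm upgrade "$release" "$chart" --wait --timeout 5m; then
    echo "upgrade of ${release} succeeded"
  else
    echo "upgrade of ${release} failed; rolling back to revision ${previous}" >&2
    helm rollback "$release" "$previous"
  fi
}

# Usage: upgrade_or_rollback myforum .
```

Helm also offers an --atomic flag on helm upgrade that performs a similar rollback on failure; the explicit version here is only meant to show the individual steps.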

By now, you should have a good idea of how some of the key features available in Kubernetes, such as scaling and automatic load balancing, work. You should also have an appreciation for how Helm charts make it easier to perform common actions in a Kubernetes deployment, including installing, upgrading and rolling back applications.


- Author: shisekong

- Link: https://blog.361way.com/helm-scale-rollback/6890.html

- License: This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. Kindly fulfill the requirements of the aforementioned License when adapting or creating a derivative of this work.